Just before Christmas, Google released a snapshot of the steps that it will be expecting its search experts to follow. The seven-page document is far from an insight into the inner sanctum of the search engine’s voice algorithms, but it does at least give a top-line flavour of the sorts of results that Google is looking to deliver through what it terms “eyes-free” devices – things such as its Google Assistant-powered smartphone apps, smart speakers and personal assistants.

Notably, the document only gives examples of non-commercial searches, but it is likely that the principles that apply to commercial searches will mirror the standards applied to non-commercial and informational searches – at least initially.

So what are the key takeaways?

Does the answer “meet the need”?

The acid test for any search result is whether it meets the needs of the user. This, of course, is as true for voice search as it is for a desktop or mobile search, but determining whether an answer meets the need without a visual aid requires a slightly different approach.

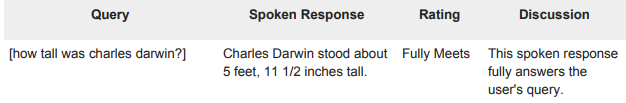

The guidelines give some examples of answers that would meet the need, to varying degrees of success. Simple queries have very predictable responses, for example:

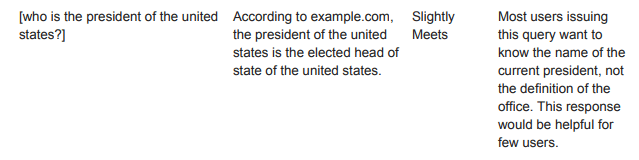

However, queries where there are potential ‘double meanings’ are slightly more complicated. One example given is the query “who is the president of the United States?”.

Now in a visual query, this result would present various pieces of information in knowledge boxes and schemas showing facts about the President of the United States, photos and fact files, but this wouldn’t work in a voice result.

This becomes a problem where the question could have multiple answers, or where the answer may change on a regular basis. The example answer given to that question is:

Whilst the answer correctly explains the role of the President of the United States, it is likely that the user is looking for the name of the current President, not information on the role itself. This exposes a limitation of voice search: it has to assume a particular need on the part of the user.

Length, formulation and elocution all matter

The other key consideration will be what Google describes as the “speech quality” of the answer: essentially, a rating of how the answer is delivered to the user. For this, three elements are taken into consideration: length, formulation and elocution.

When judging length, Google is looking to deliver answers that address the likely demands of the query, without delivering a response that is excessively long or detailed. Raters will be asked to determine whether the response is an appropriate length for the complexity of the query, and whether there are more concise ways in which the information could be delivered.

When judging formulation, raters will be asked to consider whether the response sounds ‘natural’, taking into account elements such as grammar and language and, where a source is cited, whether that source is clear and credible.

In this example, the answer would not sound natural due to the erroneous “however”:

Elocution will focus on pronunciation. Whilst computerised voices have improved significantly in this regard, there are still issues with automated voices mispronouncing certain words – particularly names and places. Google search raters will be looking for this, as well as whether the answer was delivered at a natural pace, and whether there are any awkward sentence constructs that could be avoided – such as unnecessary sibilance or tongue twisters.